Semantic Kernel Function Calling

Exploring the function calling capability of Semantic Kernel.

Introduction

About a year ago I first explored Semantic Kernel, a tool for orchestrating AI services. I tested the planner capability for executing a sequence of actions based on a natural language prompt. The idea was to create a workflow that fetches some data, transforms it and then does something useful with it. The tool was still in preview and I didn't have a clear use case for it so I left it there.

Recently, I revisited the tool and decided to give it another try, as the somewhat complex planner has been replaced with the function calling feature, which allows the AI model to call functions directly. The functions need to be described to the model along with their parameters, and the AI model handles the communication between the user and the function implementation. This is a more LLM-native and efficient approach. The role of Semantic Kernel is to describe the functions to the AI model and to invoke them when the model asks for it.
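
To make the idea concrete, here is a minimal TypeScript sketch of what "describing a function to the model" amounts to. It uses neither Semantic Kernel nor a real model API. The `ToolDefinition` and `ToolCall` shapes, the `tools` registry and the `dispatch` helper are all assumptions made for illustration, loosely modelled on the JSON schema style that chat completion APIs use for tools.

```typescript
// A function description as the model would see it: a name, a natural
// language description and a schema for the parameters.
interface ToolDefinition {
  name: string;
  description: string;
  parameters: {
    type: "object";
    properties: Record<string, { type: string; description: string }>;
    required: string[];
  };
}

// What the model sends back when it decides a function should be called.
interface ToolCall {
  name: string;
  arguments: string; // JSON-encoded arguments
}

type Handler = (args: Record<string, unknown>) => unknown;

// Hypothetical registry pairing each description with its implementation.
const tools: { definition: ToolDefinition; handler: Handler }[] = [
  {
    definition: {
      name: "order_product",
      description: "Order a new product with product name",
      parameters: {
        type: "object",
        properties: {
          name: { type: "string", description: "Name of the product to order" },
        },
        required: ["name"],
      },
    },
    handler: (args) => `Ordered ${String(args["name"])}`,
  },
];

// Execute the call requested by the model and return the result as text,
// which would then be sent back to the model as the function result.
function dispatch(call: ToolCall): string {
  const tool = tools.find((t) => t.definition.name === call.name);
  if (!tool) {
    return `Unknown function: ${call.name}`;
  }
  const args = JSON.parse(call.arguments) as Record<string, unknown>;
  return JSON.stringify(tool.handler(args));
}
```

The model only ever sees the `definition` part. Whether it picks the right function therefore depends on how well the name, description and parameters explain what the function does.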

This all sounded quite promising, so I wanted to see if I could build a backend service that offers company data, which I could communicate with using natural language.

The demo project repository can be found on GitHub.

Use case

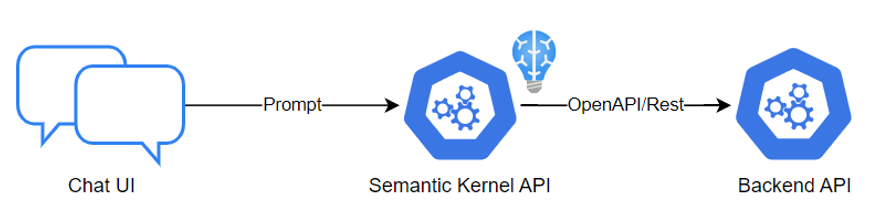

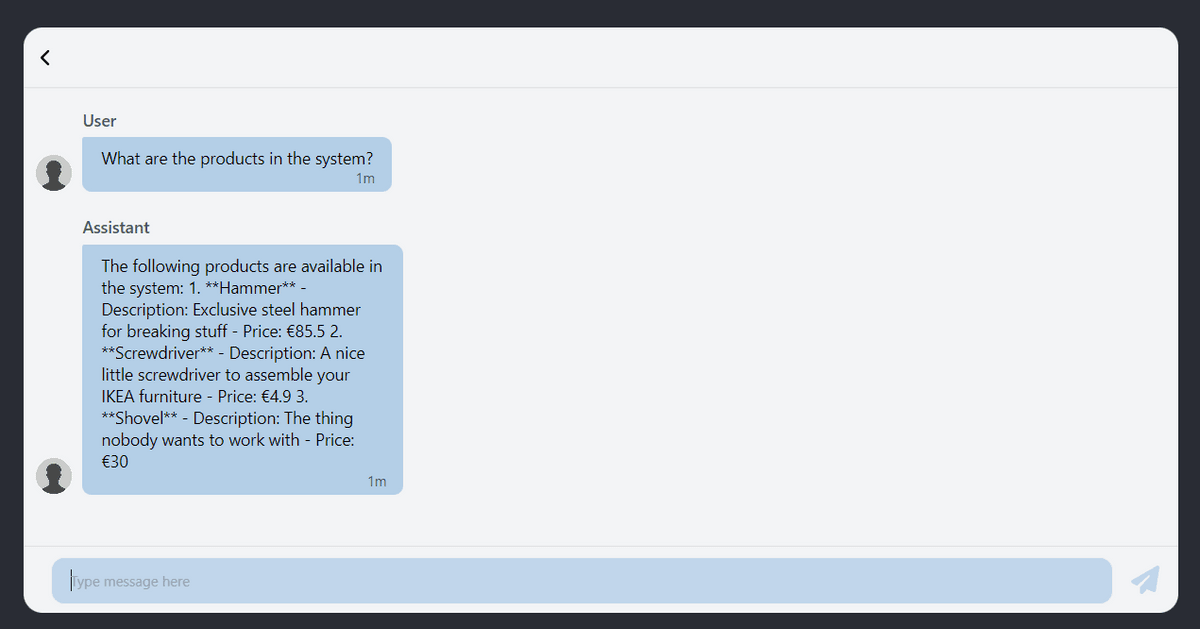

I created a simple demo project consisting of a chat frontend, a Semantic Kernel backend API and a business backend API that holds product data and exposes endpoints for fetching the data and creating an order. The idea is to be able to execute the backend API functionality by talking to the model through the chat frontend.

Implementation

The backends are implemented in C# and the frontend is a simple React project created using create-react-app.

I'm using Azure OpenAI with a gpt-4 model deployed. I wasn't able to make it work with gpt-35-turbo and ended up wasting quite a lot of time and coffee. As the overall quality of gpt-4 is way better, and its costs were recently reduced, I wasn't too disappointed about abandoning gpt-35-turbo.

Products API

This is an ASP.NET Core Web API project providing the products backend functionality. It's a simple project with in-memory storage and minimal API endpoints.

The products are managed in ProductsService, which acts as a backend for our use case.

    using System.Collections.Concurrent;

    internal class ProductsService
    {
        internal record Product(string Name, string Description, string Unit, double Price);

        // In-memory product catalog.
        private readonly Product[] allProducts = [
            new Product("Hammer", "Exclusive steel hammer for breaking stuff", "€", 85.5),
            new Product("Screwdriver", "A nice little screwdriver to assemble your IKEA furniture", "€", 4.9),
            new Product("Shovel", "The thing nobody wants to work with", "€", 30),
        ];

        // Ordered products. The key is always "user", so this demo keeps a single order.
        private readonly ConcurrentDictionary<string, Product> _orderedProducts = new();

        internal Product[] Get()
        {
            return allProducts;
        }

        // Returns false if the product is unknown or an order already exists.
        internal bool Order(string name)
        {
            var product = allProducts.FirstOrDefault(p => p.Name == name);
            if (product == null)
            {
                return false;
            }

            return _orderedProducts.TryAdd("user", product);
        }

        internal Product[] GetOrderedProducts()
        {
            return _orderedProducts.Values.ToArray();
        }
    }

The corresponding API endpoints are defined in Program.cs. The endpoint names and descriptions need to be descriptive, as function calling relies on the OpenAPI descriptions when the model decides which functions to call. The Semantic Kernel documentation has a list of tips and tricks for describing your APIs for the kernel.

    app.MapGet("/products", (ProductsService service) =>
    {
        return service.Get();
    })
    .WithName("get_all_products")
    .WithSummary("Get all products")
    .WithDescription("Returns a list of products in the system")
    .WithOpenApi();

    app.MapPost("/order", (ProductsService service, string body) =>
    {
        return service.Order(body);
    })
    .WithName("order_product")
    .WithSummary("Order product with name")
    .WithDescription("Order a new product with product name")
    .WithOpenApi();

    app.MapGet("/orders", (ProductsService service) =>
    {
        return service.GetOrderedProducts();
    })
    .WithName("get_ordered_products")
    .WithSummary("Get ordered products")
    .WithDescription("Returns a list of ordered products")
    .WithOpenApi();
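
As a rough illustration of why the names and descriptions matter, the TypeScript sketch below turns a hand-written OpenAPI fragment into the kind of function list a model could choose from. The fragment is an assumed approximation of what the generated swagger.json contains for the three endpoints. `toFunctionDescriptions` and the `plugin-operation` naming are hypothetical and are not how Semantic Kernel's importer is implemented.

```typescript
interface OpenApiOperation {
  operationId: string;
  summary?: string;
  description?: string;
}

// Paths map to HTTP methods, which map to operations.
type OpenApiPaths = Record<string, Record<string, OpenApiOperation>>;

// Assumed shape of the generated document for the three endpoints.
const paths: OpenApiPaths = {
  "/products": {
    get: {
      operationId: "get_all_products",
      summary: "Get all products",
      description: "Returns a list of products in the system",
    },
  },
  "/order": {
    post: {
      operationId: "order_product",
      summary: "Order product with name",
      description: "Order a new product with product name",
    },
  },
  "/orders": {
    get: {
      operationId: "get_ordered_products",
      summary: "Get ordered products",
      description: "Returns a list of ordered products",
    },
  },
};

interface FunctionDescription {
  name: string;
  description: string;
  method: string;
  path: string;
}

// Flatten every operation into one describable function per endpoint.
function toFunctionDescriptions(pluginName: string, apiPaths: OpenApiPaths): FunctionDescription[] {
  const result: FunctionDescription[] = [];
  for (const [path, methods] of Object.entries(apiPaths)) {
    for (const [method, operation] of Object.entries(methods)) {
      result.push({
        name: `${pluginName}-${operation.operationId}`,
        // Fall back to the summary when no description is given.
        description: operation.description ?? operation.summary ?? "",
        method: method.toUpperCase(),
        path,
      });
    }
  }
  return result;
}
```

Calling `toFunctionDescriptions("products", paths)` yields three entries. An endpoint without a name or description would show up here as a function the model has very little to go on.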

That's all on the products backend side.

Semantic Kernel Backend API

This is also an ASP.NET Core Web API, with the Microsoft.SemanticKernel NuGet package installed. At the time of writing, the latest version is 1.16.2. The Microsoft.SemanticKernel.Plugins.OpenApi package is required for the OpenAPI support.

The kernel is created, and the necessary services are attached to it, in this project. I created a separate service class for the kernel, which is injected as transient, as the documentation suggests. The kernel is supposedly extremely lightweight, so this is hopefully not going to be an issue.

    using Microsoft.Extensions.Options;
    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.ChatCompletion;
    using Microsoft.SemanticKernel.Connectors.OpenAI;
    using Microsoft.SemanticKernel.Plugins.OpenApi;

    public class KernelService
    {
        private readonly Kernel _kernel;
        private readonly OpenAIPromptExecutionSettings _openAIPromptExecutionSettings;
        private readonly ChatHistory _chatHistory;

        public KernelService(IOptionsMonitor<OpenAISettings> options)
        {
            // Create kernel instance and add Azure OpenAI chat completion service.
            var builder = Kernel.CreateBuilder().AddAzureOpenAIChatCompletion(
                options.CurrentValue.DeploymentName,
                options.CurrentValue.Endpoint,
                options.CurrentValue.ApiKey);

            builder.Services.AddLogging(services => services.AddConsole().SetMinimumLevel(LogLevel.Trace));

            // Build the kernel.
            _kernel = builder.Build();

            // Import the Products API as a plugin. Constructors can't be async,
            // so block until the import has finished.
    #pragma warning disable SKEXP0040 // Type is for evaluation purposes only and is subject to change or removal in future updates. Suppress this diagnostic to proceed.
            _kernel.ImportPluginFromOpenApiAsync(
                pluginName: "products",
                uri: new Uri("https://localhost:7194/swagger/v1/swagger.json"),
                executionParameters: new OpenApiFunctionExecutionParameters()
                {
                    EnablePayloadNamespacing = true
                }
            ).GetAwaiter().GetResult();
    #pragma warning restore SKEXP0040 // Type is for evaluation purposes only and is subject to change or removal in future updates. Suppress this diagnostic to proceed.

            _chatHistory = new ChatHistory();

            // We want to enable auto invoking functions to be able to automatically utilize backend APIs.
            _openAIPromptExecutionSettings = new()
            {
                ToolCallBehavior = ToolCallBehavior.AutoInvokeKernelFunctions
            };
        }

        public async Task<string> Execute(string message)
        {
            // Add user prompt to chat history.
            _chatHistory.AddMessage(AuthorRole.User, message);

            // Create chat completion service instance.
            var chatCompletionService = _kernel.Services.GetRequiredService<IChatCompletionService>();

            // Get the response from the AI.
            var result = await chatCompletionService.GetChatMessageContentAsync(
                _chatHistory,
                executionSettings: _openAIPromptExecutionSettings,
                kernel: _kernel
            );

            return result.ToString();
        }
    }

It's worth mentioning that the OpenAPI plugin support is still in evaluation (1.16.2-alpha).

The most important part of creating the kernel is importing the plugins from an OpenAPI document using ImportPluginFromOpenApiAsync. Here, the document is the Swagger description of the Products API. The method optionally accepts a custom HttpClient so, although I didn't have the time to test it, I'd assume any authentication method or set of headers is supported.

Further on we introduce the prompt execution settings and set the ToolCallBehavior to ToolCallBehavior.AutoInvokeKernelFunctions. This is necessary for the kernel to automatically invoke the API endpoints instead of just answering how they could be called or implemented.

The Execute method is the entry point for the chat frontend to communicate with the kernel. The message is added to the chat history and the chat completion service is used to get the response from the AI. The kernel is aware of the plugins related to products so they can be executed automatically.
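
What auto invocation means in practice can be sketched as a loop: ask the model, run whatever function it requests, feed the result back, and repeat until it answers in plain text. The TypeScript below is a simplified stand-in and not Semantic Kernel's implementation. The `Message` and `ModelReply` types, the scripted `fakeModel` and the `maxRounds` limit are all assumptions made for illustration.

```typescript
type Message =
  | { role: "user" | "assistant"; content: string }
  | { role: "tool"; name: string; content: string };

// A model reply is either plain text or a request to call a function.
type ModelReply =
  | { kind: "text"; content: string }
  | { kind: "toolCall"; name: string; args: Record<string, string> };

type Model = (history: Message[]) => ModelReply;
type ToolFn = (args: Record<string, string>) => string;
type Functions = Record<string, ToolFn | undefined>;

// Keep asking the model until it answers with text, executing any
// requested function in between and feeding the result back.
function chatWithAutoInvoke(
  model: Model,
  functions: Functions,
  history: Message[],
  userMessage: string,
  maxRounds = 5,
): string {
  history.push({ role: "user", content: userMessage });
  for (let round = 0; round < maxRounds; round++) {
    const reply = model(history);
    if (reply.kind === "text") {
      history.push({ role: "assistant", content: reply.content });
      return reply.content;
    }
    const fn = functions[reply.name];
    const result = fn ? fn(reply.args) : `Unknown function: ${reply.name}`;
    history.push({ role: "tool", name: reply.name, content: result });
  }
  return "Stopped: too many function calls.";
}

// Scripted stand-in for the model: request the function first, then
// answer using the function result found at the end of the history.
const fakeModel: Model = (history) => {
  const last = history[history.length - 1];
  if (last !== undefined && last.role === "tool") {
    return { kind: "text", content: `Available products: ${last.content}` };
  }
  return { kind: "toolCall", name: "get_all_products", args: {} };
};

const functions: Functions = {
  get_all_products: () => "Hammer, Screwdriver, Shovel",
};

const answer = chatWithAutoInvoke(fakeModel, functions, [], "What do you sell?");
// answer is "Available products: Hammer, Screwdriver, Shovel"
```

Without auto invocation the loop would stop at the first `toolCall` reply, and the caller would have to run the function and continue the conversation itself.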

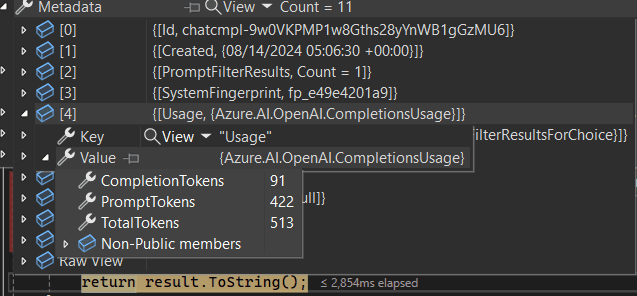

Used tokens can be seen on the chat completion response metadata.

The above image shows the tokens used by a single chat request fetching data from the Products API. The tokens don't cost much, but in a multi-user environment we'd hit the gpt-4 10K TPM (tokens-per-minute) quota quite soon.
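
A quick back-of-the-envelope calculation shows how little headroom such a quota leaves. The TypeScript sketch below is only arithmetic, and the 800 tokens per request is a hypothetical figure chosen for illustration. The real number is whatever the response metadata reports.

```typescript
// How many chat requests fit into a tokens-per-minute quota.
function maxRequestsPerMinute(tpmQuota: number, tokensPerRequest: number): number {
  if (tokensPerRequest <= 0) {
    throw new Error("tokensPerRequest must be positive");
  }
  return Math.floor(tpmQuota / tokensPerRequest);
}

// Hypothetical token usage of one chat request, including the function
// descriptions and the function result sent back to the model.
const assumedTokensPerRequest = 800;

const requestsPerMinute = maxRequestsPerMinute(10000, assumedTokensPerRequest);
// requestsPerMinute is 12
```

Keep in mind that one user message with function calling typically results in more than one model round trip, and each of them counts towards the quota.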

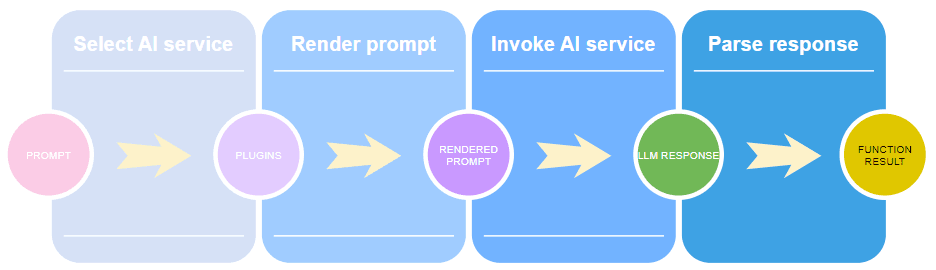

Below is a high-level program flow of the whole process.

Chat UI

The chat UI is a simple React project created with create-react-app. Below is an example command for creating a new project with TypeScript support.

npx create-react-app semantickernelui --template typescript

The chat functionality is built with MinChat's React Chat UI. The input messages are stored in state and sent to the backend API, and the response is added to the chat messages.
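
The message handling can be sketched without any UI library. The TypeScript below is a minimal sketch under stated assumptions: the `ChatMessage` shape, the `CHAT_URL` endpoint, the `{ message }` request body and the `PostJson` function type are all hypothetical and not taken from the demo project or from MinChat.

```typescript
interface ChatMessage {
  sender: "user" | "assistant";
  text: string;
}

// Minimal shape of the HTTP call the sketch depends on, so the backend
// call can be swapped out (for example with a fake in tests).
type PostJson = (url: string, body: unknown) => Promise<string>;

// Hypothetical endpoint of the Semantic Kernel backend API.
const CHAT_URL = "https://localhost:7000/chat";

// Return a new list instead of mutating the old one, as React state expects.
function appendMessage(messages: readonly ChatMessage[], message: ChatMessage): ChatMessage[] {
  return [...messages, message];
}

// Add the user's message, send it to the backend and add the reply.
async function sendMessage(
  postJson: PostJson,
  messages: readonly ChatMessage[],
  text: string,
): Promise<ChatMessage[]> {
  const withUser = appendMessage(messages, { sender: "user", text });
  try {
    const reply = await postJson(CHAT_URL, { message: text });
    return appendMessage(withUser, { sender: "assistant", text: reply });
  } catch {
    return appendMessage(withUser, { sender: "assistant", text: "Sorry, something went wrong." });
  }
}
```

In a React component the returned list would be passed to the state setter, and `postJson` would be a thin wrapper around `fetch`.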

Summary

Function calling using OpenAPI plugins turned out to be way more intuitive and easy than playing around with the planner. And of course you can also create your own native functions for whatever purpose. Semantic Kernel even has a Logic Apps plugin but frankly, that sounds a bit scary.

The actual implementation is so simple that it's almost boring. The OpenAPI descriptions are just plugged in and the kernel and AI model handle the rest. For more accurate results, you might want to add a persona, that is, a system message, so that the model understands the context a bit better.

I can imagine this kind of tool being useful in lots of chatbot scenarios utilizing company data or other publicly available data. That is, if you can influence the quality of the backend API descriptions.