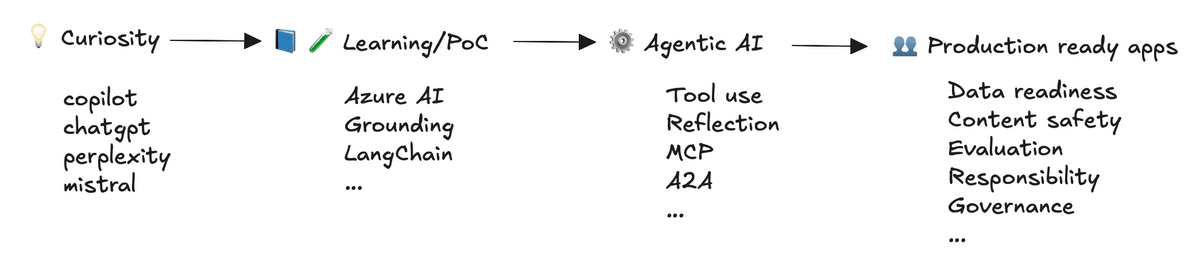

My AI learning journey: From curiosity towards crafting with confidence

GitHub Copilot has been quietly assisting me in Visual Studio Code for a few years now, helping with cron expressions, regex patterns and unit tests, while also occasionally hallucinating non-existent methods and giving misleading information. Perplexity, Mistral, ChatGPT and Copilot Chat have become part of my toolkit, helping me summarize topics, compare frameworks and technologies, and explore new ideas.

As AI models advance at an unprecedented rate, the number of customers expecting AI-enabled solutions has grown in parallel. It became clear that casual use of these tools wasn't enough. I wanted to understand why they work, how they can be built into real systems and where they could (and shouldn't) be trusted.

This led me from experimenting with surface‑level capabilities to pursuing a structured learning path—starting with Azure AI services, preparing for the AI Fundamentals certification, exploring Agentic AI and testing orchestration frameworks. The goal was simple: to move from curiosity to confidence and to learn how to craft solutions that effectively integrate rapidly evolving AI capabilities without losing sight of reliability or purpose.

AI Foundry for experimentation

I use Azure AI Foundry to explore and evaluate AI models, using my Azure subscription to quickly test both popular proprietary models and open-source ones. I try them in the AI Foundry playground, which is helpful for quick experiments to assess how well each model fits both my technical goals and my budget.

Alongside this, I explored LangChain and Semantic Kernel, orchestration frameworks that help manage interactions between LLMs and external tools, through hands-on labs and small proof-of-concept (PoC) projects. Of the two, building PoCs is more rewarding, even if it sometimes means lengthy debugging sessions. AI also accelerates my Python-based side projects, from building simple neural networks to quick prototyping of solutions using LLMs.

While AI Foundry helped me explore model behavior at a surface level, studying Agentic AI shifted my focus toward how these models could operate autonomously within complex systems.

Agentic AI

AI agents and the Model Context Protocol (MCP, an emerging interoperability protocol) are viewed as the next big leap in applied AI, enabling systems that can plan and act intelligently with limited human guidance. Asking an AI for travel recommendations based on preferences is already possible, and having it book the flights is not far off, at least in prototype form.

Coming from a background in deterministic systems, designing around probabilistic behavior felt like a major shift. Layers added to simplify development or reduce cost often introduce new complexity—and with LLMs, reproducibility and correctness become moving targets.

When I began Andrew Ng's Agentic AI course on DeepLearning.AI, it helped frame LLMs not as end products but as components in a larger system: tools that operate best when given clear objectives, constraints and feedback mechanisms. The key lesson was to design around the probabilistic nature of LLMs, as agentic systems rely far more on the structured surrounding logic than on model capability.

The key takeaways have been:

- Decompose complex problems into smaller tasks to make evaluation easier

- Use reflection (the LLM acts as both creator and critic, or external feedback is fed back in) to strengthen output quality

- Define explicit rubrics for model judgment—this steadies subjective assessments and reduces bias
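The rubric idea from the last takeaway can be sketched in a few lines. This is only an illustration under assumptions: the criteria in `RUBRIC` and the helper names `build_judge_prompt` and `score_judgment` are hypothetical, and the judge model call itself is left out so the snippet runs on its own.

```python
import json

# Hypothetical rubric: each criterion is a yes/no question for the judge model.
RUBRIC = [
    "Does the text name at least one insect species or group?",
    "Does the text state whether the plant occurs in Finland?",
    "Does the text say whether the plant was found in a nursery catalog?",
]

def build_judge_prompt(text: str, rubric: list[str]) -> str:
    """Ask the judge for one boolean per criterion, returned as a JSON list."""
    numbered = "\n".join(f"{i + 1}. {q}" for i, q in enumerate(rubric))
    return (
        "Answer each question about the text with true or false.\n"
        f"Return a JSON list of {len(rubric)} booleans and nothing else.\n\n"
        f"Questions:\n{numbered}\n\nText:\n{text}"
    )

def score_judgment(raw_reply: str, rubric: list[str]) -> float:
    """Turn the judge's JSON reply into the fraction of criteria met."""
    answers = json.loads(raw_reply)
    if (
        not isinstance(answers, list)
        or len(answers) != len(rubric)
        or not all(isinstance(a, bool) for a in answers)
    ):
        raise ValueError("judge reply does not match the rubric")
    return sum(answers) / len(rubric)
```

Splitting a subjective "is this good?" question into separate yes/no checks makes each judgment easier to inspect, and the resulting fraction can be tracked across prompt or model changes.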

PoC: Tool Use and Reflection

After completing the course, I wanted to apply those concepts in a practical setting. I explored a few directions for a PoC that could use at least some of these techniques and technologies, and settled on the one below.

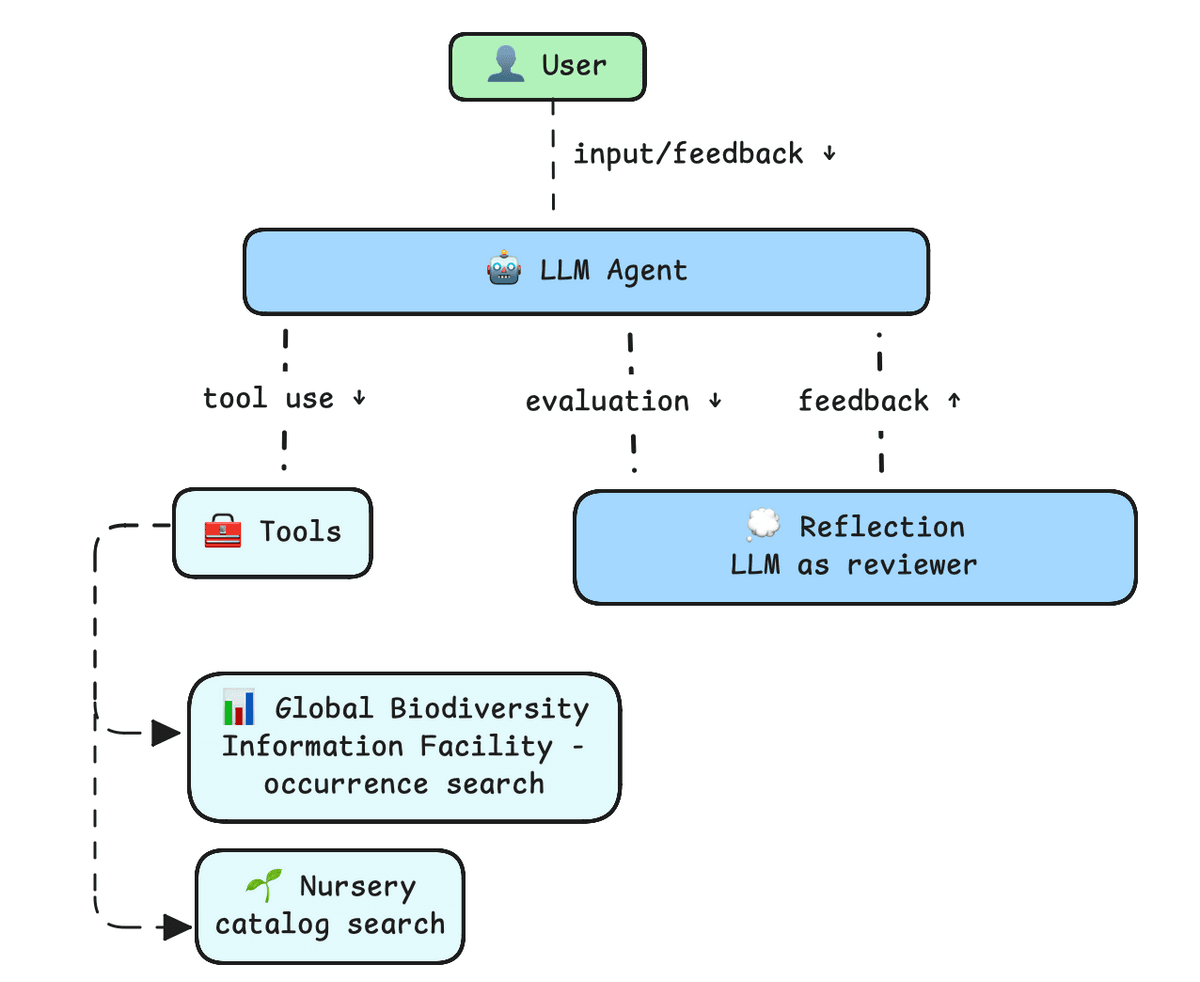

The experiment centered on a simple task: take the scientific name of a plant as input, use AI to determine which insects it supports, whether it occurs in Finland and whether it is available in local nursery catalogs.

To accomplish this, I provided the LLM with two tools:

- GBIF (Global Biodiversity Information Facility) occurrence check, which retrieves GBIF records for a plant species in Finland.

- Catalog search, which looks for matches in local nursery catalogs (PDFs) using RAG-based search.
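To give a feel for the retrieval half of a RAG-based catalog search, here is a toy sketch. It assumes the PDF text has already been extracted and split into chunks, and it swaps real embeddings for simple word-count vectors, so it is a stand-in for the idea rather than the PoC's actual implementation. The names `tokenize`, `cosine` and `search_catalog` are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def search_catalog(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k chunks most similar to the query, dropping zero-score chunks."""
    q = tokenize(query)
    scored = [(cosine(q, tokenize(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored[:top_k] if score > 0]
```

In a fuller pipeline the retrieved chunks would be passed to the LLM as context, so that its statement about availability is grounded in catalog text rather than in the model's memory.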

Each tool was defined in code with a name, description and parameters so the model could reason about when and how to use them. Tool use brings more consistency in areas where an LLM lacks intrinsic capability, such as solving mathematical problems, retrieving current information or grounding outputs in a specific dataset.

Example tool definition:

    gbif_tool_def = {
        "type": "function",
        "function": {
            "name": "query_gbif_occurrences",
            "description": "Get number of GBIF occurrences for a plant species in Finland",
            "parameters": {
                "type": "object",
                "properties": {"plant_name": {"type": "string", "description": "Scientific name of the plant"}},
                "required": ["plant_name"]
            }
        }
    }
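The function behind this definition is not shown in the post, so the following is a guess at what a minimal version could look like. It assumes the public GBIF occurrence search endpoint with its `scientificName`, `country` and `limit` query parameters and the `count` field in the response. The `fetch` parameter, the `fetch_json` helper and the shape of the returned JSON are my own additions to keep the sketch testable without network access.

```python
import json
import urllib.parse
import urllib.request

GBIF_SEARCH_URL = "https://api.gbif.org/v1/occurrence/search"

def fetch_json(url: str) -> dict:
    """Default fetcher (hypothetical helper): GET the URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)

def query_gbif_occurrences(plant_name: str, fetch=fetch_json) -> str:
    """Return the GBIF occurrence count for a species in Finland as a JSON string."""
    params = urllib.parse.urlencode(
        {"scientificName": plant_name, "country": "FI", "limit": 0}
    )
    data = fetch(f"{GBIF_SEARCH_URL}?{params}")
    # "count" is the total number of matching records; limit=0 asks for no record bodies
    return json.dumps(
        {"plant_name": plant_name, "occurrences_in_finland": data.get("count", 0)}
    )
```

Returning a string keeps the result directly usable as the `content` of a tool message, and the injectable `fetch` makes it easy to unit test the deterministic part of the system separately from the LLM.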

I chose to keep library usage to a minimum to better understand the underlying process involving tool use.

This is a simple example of how tool use works in practice:

    import json

    # client, messages and tools are assumed to be set up earlier
    response = client.chat.completions.create(
        model="gpt-5.2-chat",
        messages=messages,
        tools=tools,
        max_completion_tokens=16384,
    )
    choice = response.choices[0]

    if choice.message.tool_calls:
        # Keep the assistant turn that requested the tools in the history
        messages.append(choice.message)
        # The prompt instructs the LLM to use both tools before generating the output;
        # the code is kept simple on the assumption that it behaves as expected
        for tool_call in choice.message.tool_calls:
            if tool_call.function.name == "query_gbif_occurrences":
                args = json.loads(tool_call.function.arguments)
                result = query_gbif_occurrences(args["plant_name"])
                # Tool message content must be a string
                messages.append({"role": "tool", "tool_call_id": tool_call.id, "content": str(result)})
            # handle calls to other tools
        # Second call: the model now sees the tool results and writes the answer
        final_response = client.chat.completions.create(model="gpt-5.2-chat", messages=messages)
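As the number of tools grows, the `if` chain can be replaced by a lookup table. The sketch below is one possible shape, not the PoC's code: `run_tool_calls` and `registry` are hypothetical names, the fake call object only mimics the `id`, `function.name` and `function.arguments` attributes used above, and the lambda returns a stub value rather than real GBIF data.

```python
import json
from types import SimpleNamespace

def run_tool_calls(tool_calls, registry):
    """Execute each requested tool and return one 'tool' message per call."""
    tool_messages = []
    for call in tool_calls:
        func = registry.get(call.function.name)
        if func is None:
            content = json.dumps({"error": f"unknown tool: {call.function.name}"})
        else:
            try:
                args = json.loads(call.function.arguments)
                content = str(func(**args))
            except Exception as exc:  # report failures to the model instead of crashing
                content = json.dumps({"error": str(exc)})
        tool_messages.append(
            {"role": "tool", "tool_call_id": call.id, "content": content}
        )
    return tool_messages

# Stand-in for an SDK tool call object, used only to exercise the function
fake_call = SimpleNamespace(
    id="call_1",
    function=SimpleNamespace(
        name="query_gbif_occurrences",
        arguments='{"plant_name": "Salix caprea"}',
    ),
)
registry = {"query_gbif_occurrences": lambda plant_name: f"stub result for {plant_name}"}
tool_messages = run_tool_calls([fake_call], registry)
```

Every tool call gets a reply, including unknown or failing ones, so the message history stays consistent and the model gets a chance to recover from the error.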

Once tool integration was stable, I added a reflection step in which an LLM re-evaluates the generated output. Using the same or a different model, it reviews the earlier text and produces a refined version along with structured feedback. As far as I could judge without expertise in entomology, this second pass noticeably improved factual accuracy and fluency.

Here’s a version of a minimal reflection prompt:

    prompt = f"""
    Review the following article for correctness. Return a JSON object with keys `refined_text` and `feedback` describing corrections and reasons.

    Article to review: {text_to_review}

    Output format:
    {{
        "refined_text": "<improved text goes here>",
        "feedback": "<reasons for modifying the text>"
    }}
    """
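Because the reviewer's reply is itself LLM output, it helps to parse it defensively. The following sketch assumes the reply arrives as a plain string. `parse_reflection` is a hypothetical helper, and falling back to the original text on a malformed reply is one possible design choice rather than what the PoC necessarily does.

```python
import json

def parse_reflection(raw: str, original_text: str) -> dict:
    """Parse the reviewer's reply; fall back to the original text if it is unusable."""
    fence = "`" * 3
    cleaned = raw.strip()
    # Models sometimes wrap JSON in a Markdown code fence; strip it if present
    if cleaned.startswith(fence):
        cleaned = cleaned.strip("`").strip()
        if cleaned.lower().startswith("json"):
            cleaned = cleaned[4:]
    try:
        data = json.loads(cleaned)
    except json.JSONDecodeError:
        return {"refined_text": original_text, "feedback": "reviewer reply was not valid JSON"}
    if not isinstance(data, dict) or not {"refined_text", "feedback"} <= set(data):
        return {"refined_text": original_text, "feedback": "reviewer reply missed required keys"}
    return {"refined_text": data["refined_text"], "feedback": data["feedback"]}
```

With this in place, a bad reflection pass degrades to "no change" instead of breaking the pipeline, and the `feedback` field records why.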

Even at this small scale, combining tool use and reflection captured the essence of Agentic AI: connecting structured logic with autonomous reasoning to enhance reliability. It demonstrated that model orchestration and iterative evaluation are not abstract ideas—they’re engineering skills that make AI systems both more traceable and more useful.

Insights gained so far

Building the PoC reinforced how different AI implementation feels compared to traditional software engineering. Even simple prototypes revealed how unpredictable outputs can be and how careful system design and evaluation matter just as much as coding.

While deterministic systems can be validated through unit and integration tests, LLM-based applications require layered evaluation: measuring reliability, factual accuracy and stability across changing conditions. Breaking down large objectives into smaller, verifiable components, defining user expectations and integrating systematic evaluation are crucial.
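One simple layer of such an evaluation is checking how stable an answer is across repeated runs. The sketch below is an illustration only: `agreement_rate` is a hypothetical helper, and `generate` stands for any function that sends a prompt to a model and returns its text reply.

```python
from collections import Counter
from typing import Callable

def agreement_rate(generate: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Call generate repeatedly and return the share of runs matching the most common answer."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Normalize lightly so trivial differences in case or whitespace do not count
    answers = [generate(prompt).strip().lower() for _ in range(runs)]
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / runs
```

This works best on small, decomposed questions with short answers (for example "does this plant occur in Finland: yes or no?"), which is another reason to break large objectives into verifiable pieces.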

Using LLMs also introduced new trade-offs. Balancing creativity with grounding, adding guardrails against misuse, and addressing model bias and drift all became part of everyday design thinking. The technology evolves faster than most release cycles, so stability demands deliberate, testable engineering.

Ultimately, success with AI isn’t just about picking the right model—it’s about making thoughtful design decisions, defining boundaries of autonomy and keeping humans in the loop where judgment still matters most. The practical experience of connecting tools, prompts and feedback loops taught me that AI engineering is as much about orchestration and reflection as it is about coding.

Path ahead

The next step is to extend the PoC with new features and experiment with emerging protocols and services such as Model Context Protocol, Agent-to-Agent communication and Azure AI Search. I plan to deepen my understanding of how models are built and fine-tuned, with a stronger focus on evaluation, ethical design and responsible AI usage.

If you are exploring how to apply these ideas in your own work or simply thinking through how AI might fit into your products or processes, this is the kind of problem our team at Rebase enjoys working on, helping turn promising ideas into practical, business-focused solutions. Whether you are just starting to experiment or looking to take an existing approach further, we are always happy to have a conversation and share what we have learned along the way!